Sfumato is a pair of tools that generate CSS for your web-based projects. You can create HTML markup and use pre-defined utility class names to style the rendered markup, without actually writing any CSS code or leaving your HTML editor! The resulting HTML markup is clean, readable, and consistent. And the generated CSS file is tiny, even if it hasn't been minified!

The first tool is the command line interface (CLI), which you can install once and use on any project. It will watch/build CSS as you work.

The second tool is a NuGet package that you can add to a compatible .NET project. After you add a snippet of code to your startup, it will build and watch as you run or debug your app, generating CSS on the fly.

Sfumato is compatible with the Tailwind CSS v4 class naming structure, so you can switch between Sfumato and Tailwind. In addition, Sfumato has the following features:

Cross-platform — Both the Sfumato CLI tool and nuget package work on Windows, Mac, and Linux on x64 and Arm64/Apple Silicon CPUs. They're native, multi-threaded, and lightning fast! So you can build anywhere.

Low overhead — Unlike Tailwind, Sfumato doesn't rely on NodeJS or any other packages or frameworks. Your project path and source repository will not have extra files and folders to manage, and any configuration is stored in your source CSS file.

Dev platform agnostic — The Sfumato CLI works great in just about any web project:

JavaScript frameworks, like React, Angular, etc.

Hybrid mobile projects, like Blazor Hybrid, Flutter, etc.

Content Management Systems (CMS) like WordPress, Umbraco, etc.

Custom web applications built with ASP.NET, PHP, Python, etc.

Basic HTML websites

Works great with ASP.NET — Sfumato supports ASP.NET, Blazor, and other Microsoft stack projects by handling "@@" escapes in razor/cshtml markup files. So you can use arbitrary variants and container utilities like "@container" by escaping them in razor syntax (e.g. "@@container").

Instead of using the CLI tool to build and watch your project files, you can add the Sfumato Core NuGet package to your ASP.NET-based project to have Sfumato generate CSS as you debug, or when you build or publish.

Imported CSS files work as-is — Sfumato features can be used in imported CSS files without any modifications. It just works. Tailwind's Node.js pipeline requires additional changes to be made in imported CSS files that use Tailwind features and setup is finicky.

Better dark theme support — Unlike Tailwind, Sfumato allows you to provide "system", "light", and "dark" options in your web app without writing any JavaScript code (other than widget UI code).

Adaptive design baked in — In addition to the standard media breakpoint variants (e.g. sm, md, lg, etc.) Sfumato has adaptive breakpoints that use viewport aspect ratio for better device identification (e.g. mobi, tabp, tabl, desk, etc.).

Integrated form element styles — Sfumato includes form field styles that are class name compatible with the Tailwind forms plugin.

More colorful — The Sfumato color library provides 20 shade steps per color (values of 50-1000 in increments of 50).

More compact CSS — Sfumato combines media queries (like dark theme styles), reducing the size of the generated CSS even without minification.

Workflow-friendly — The Sfumato CLI supports redirected input for use in automation workflows.

There's usually more to the story so if you have questions or comments about this post let us know!

Do you need a new software development partner for an upcoming project? We would love to work with you! From websites and mobile apps to cloud services and custom software, we can help!

If you’re building a new PC, upgrading your desktop, or purchasing a new laptop, you’ve likely noticed one thing: memory has become very expensive. RAM prices have jumped sharply compared to just a year or two ago, and the increase isn’t slowing down.

This isn’t a simple shortage. It’s the result of long-term changes in how memory is made, who it’s made for, and how manufacturers manage supply.

AI services are consuming massive amounts of memory. These systems rely on specialized high-bandwidth memory (HBM) to train and run large models. Because HBM is far more profitable, memory manufacturers are redirecting production capacity away from traditional consumer RAM. That means fewer chips are available for the rest of us.

There are limits on raw materials. All RAM starts with advanced semiconductor wafers that are expensive and produced by only a handful of suppliers worldwide. As AI companies lock in long-term contracts, wafer availability tightens, pushing up costs for all types of memory, even before it reaches consumers.

Manufacturers are intentionally controlling supply. Memory makers are keeping production tight to avoid oversupply. This helps stabilize profits but keeps prices elevated. With all major suppliers following similar strategies, competition isn’t driving prices down. In fact, companies like Micron are exiting the consumer RAM business.

Older DDR4 memory is disappearing faster than expected. As the industry transitions to DDR5, DDR4 production is being phased out. Fewer production lines mean lower availability, which is why even older systems are seeing price increases instead of discounts.

Modern devices need more RAM. What used to be considered a pro-level amount of memory (16–32 GB) is now considered standard, adding pressure to an already tight market.

High RAM prices are expected through 2026. Industry analysts report that by the end of 2026, manufacturers will have maxed out the expansion potential of their current facilities. New factories are also being built, but large-scale relief is still years away. Short-term sales may help, but a major price drop isn’t likely soon.

There are some basic strategies that can mitigate the price increases, but they're not a panacea.

Don’t wait too long to purchase. Prices have been steadily rising month over month. If you're planning to buy, purchase ASAP.

Focus on balanced systems. More RAM isn’t always better for every workload.

Watch for special deals. Even small discounts can help in a high-price market.

Consider pre-built systems. Complete PCs and laptops typically offer better value than upgrading parts. For example, Apple's usual price gouging on memory for a new Mac is actually a steal right now. But their negotiated part prices will eventually need to be renegotiated, likely increasing costs for consumers.

RAM prices reflect a fundamental shift toward AI-driven computing, not a temporary hiccup (similar to recent GPU price increases). While costs may eventually stabilize, buyers should plan with the assumption that memory will remain expensive. Making smart, timely purchasing decisions now can help you avoid paying even more later.


We're always reading about the latest security exploit or distributed denial of service (DDoS) attack. When we do, it tends to feel like something that happens to other people or businesses, and is generally a nuisance when it comes to our own lives. But could we be part of the problem?

There are a couple of things you can do to determine if your devices are being used by nefarious actors to attack and compromise networks and services on the Internet. The first is to see if your network is exposing any openings that can be exploited.

The Shodan search engine is a great tool for determining if your network is exposing any open ports. Ports are like doors that allow outsiders to connect to devices within your network. There are 65,535 possible ports, some of which are standard ports used by common services. For example, web servers use port 80 for unencrypted (HTTP) traffic and port 443 for encrypted (HTTPS) traffic. Open up those ports and point them to a device on your network, and you're now hosting a public website.

This is where Shodan comes in. It allows you to search for your network IP to see what's open. You need to sign up to use the filters (it's free), but it's a one-step process if you simply sign in using a Google account. Then in the search bar you can search your IP address like this:

ip:73.198.169.112

If you have exposed ports you'll see information about which ports and protocols are available. You can even search a subnet, representing a range of IP addresses, like this:

ip:73.198.169.0/24

This IP address has an RTSP service port open to the world.
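If the /24 subnet notation is unfamiliar, the following Python sketch shows what range of addresses it covers. It uses only the standard library `ipaddress` module and the example addresses from the searches above; it does not talk to Shodan at all.

```python
import ipaddress

# The subnet and the single address used in the example searches.
network = ipaddress.ip_network("73.198.169.0/24")
address = ipaddress.ip_address("73.198.169.112")

# A /24 fixes the first 24 bits, leaving 8 bits (256 values) for hosts.
print(network.num_addresses)    # 256
print(network[0], network[-1])  # 73.198.169.0 73.198.169.255
print(address in network)       # True
```

In other words, a subnet search for a /24 covers every address that shares the first three numbers with yours.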

Suffice it to say that most people shouldn't have any results available. If you do, make sure the open port is supposed to be open. For example, if you're hosting a Minecraft server or website.

Another great, free, and simple tool is the GreyNoise IP Check. Visit the website and it will scan its massive botnet database to see if your IP address has ever been used in a distributed attack.

If your computer has been hacked and is under the control of someone on the Internet, they may use it (and your Internet connection) to participate in a distributed denial of service (DDoS) attack. That's when a large number of computers on the Internet make requests for a website at once, overwhelming the server and effectively taking it offline. These attacks are used for various reasons, like ransom demands.

GreyNoise watches the Internet's background radiation—the constant storm of scanners, bots, and probes hitting every IP address on Earth. They've cataloged billions of these interactions to answer one critical question: is this IP a real threat or just internet noise? Security teams trust their data to cut through the chaos and focus on what actually matters.


DUID is a fully-featured replacement for GUIDs (Globally Unique Identifiers). DUIDs are more compact and web-friendly, and provide more entropy than GUIDs. We created this UUID type as a replacement for GUIDs in our projects, improving on several GUID shortcomings.

We pronounce it doo-id, but it can also be pronounced like dude, which is by design :)

You can use DUIDs as IDs for user accounts and database records, in JWTs, as unique code entity (e.g. variable) names, and more. They're an ideal replacement for GUIDs that need to be used in web scenarios.

Uses the latest .NET cryptographic random number generator

More entropy than GUID v4 (128 bits vs 122 bits)

No embedded timestamp (reduces predictability and improves strength)

Self-contained; does not use any packages

High performance, with minimal allocations

16 bytes in size; 22 characters as a string

Always starts with a letter (can be used as-is for programming language variable names)

URL-safe

Can be validated, parsed, and compared

Can be created from and converted to byte arrays

UTF-8 encoding support

JSON serialization support

TypeConverter support

Debug support (displays as string in the debugger)
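To give a feel for how a few of the properties above can fit together (16 random bytes, 22 URL-safe characters, a leading letter), here is a small Python sketch. It is not the DUID implementation, which is a .NET library. The base-62 encoding, the alphabet order, and the function names `encode`, `decode`, and `new_id` are all assumptions chosen for illustration.

```python
import secrets

# Hypothetical alphabet (not DUID's): letters come first, digits last.
ALPHABET = "abcdefghijklmnopqrstuvwxyzABCDEFGHIJKLMNOPQRSTUVWXYZ0123456789"
LENGTH = 22  # 62**22 is larger than 2**128, so 22 characters fit any 16-byte value


def encode(raw: bytes) -> str:
    """Encode exactly 16 bytes as a fixed-width, 22-character base-62 string."""
    if len(raw) != 16:
        raise ValueError("expected exactly 16 bytes")
    value = int.from_bytes(raw, "big")
    chars = []
    for _ in range(LENGTH):
        value, digit = divmod(value, 62)
        chars.append(ALPHABET[digit])
    # Digits were produced least-significant first, so reverse them.
    return "".join(reversed(chars))


def decode(text: str) -> bytes:
    """Reverse encode(); raises ValueError for a wrong length or character."""
    if len(text) != LENGTH:
        raise ValueError("expected exactly 22 characters")
    value = 0
    for ch in text:
        value = value * 62 + ALPHABET.index(ch)
    return value.to_bytes(16, "big")


def new_id() -> str:
    """Create an identifier from 128 bits of cryptographically secure randomness."""
    return encode(secrets.token_bytes(16))


print(new_id())
```

In this sketch the first character is always a letter because a 128-bit value is too small to push the leading base-62 digit past the first few entries of the alphabet, and those entries are letters. The alphabet contains only letters and digits, so the result is URL-safe without escaping.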

Yes, you can also find DUID on NuGet. Look for the package named fynydd.duid.

Similar to Guid.NewGuid(), you can generate a new DUID by calling the static NewDuid() method:

This will produce a new DUID, for example: aZ3x9Kf8LmN2QvW1YbXcDe. There are a ton of overloads and extension methods for converting, validating, parsing, and comparing DUIDs.

Here are some examples:

There is also a JSON converter for System.Text.Json that provides seamless serialization and deserialization of DUIDs:


An Amazon Web Services (AWS) outage shut down a good portion of the Internet on Monday (October 20, 2025), affecting websites, apps, and services. Updates are still being released, but here's what we know so far.

At 3:00am on Monday there was a problem with one of the core AWS database products (DynamoDB), which knocked many of the leading apps, streaming services, and websites offline for millions of users across the globe.

The problem seems to have originated at one of the main AWS data centers in Ashburn, Virginia following a software update to the DynamoDB API, which is a cloud database service used by online platforms to store user and app data. This area of Virginia is known colloquially as Data Center Alley because it has the world's largest concentration of data centers.

The specific issue appears to be related to an error in the update that affected DynamoDB DNS. The Domain Name System (DNS) routes domain name requests (e.g. fynydd.com) to their correct server IP addresses (e.g. 1.2.3.4). Since the service's domain names couldn't be matched with server IP addresses, the DynamoDB service itself could not be reached. Any apps or services that rely on DynamoDB would then experience intermittent connectivity issues or even complete outages.
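To make that failure mode concrete, here is a minimal Python sketch of what a client sees when a hostname cannot be resolved. It uses the standard library's `socket.getaddrinfo`. The `resolve` helper and the example hostname (under the reserved `.invalid` top-level domain, which never resolves) are illustrative assumptions and have nothing to do with AWS's actual endpoints.

```python
import socket


def resolve(hostname: str) -> list[str]:
    """Return the IP addresses a hostname resolves to, or [] if DNS lookup fails."""
    try:
        infos = socket.getaddrinfo(hostname, 443, proto=socket.IPPROTO_TCP)
    except socket.gaierror:
        # The name could not be matched to any address. The server may be
        # perfectly healthy, but the client has no way to find it.
        return []
    # Each result ends with a socket address tuple whose first item is the IP.
    return sorted({info[4][0] for info in infos})


addresses = resolve("database.example.invalid")  # hypothetical hostname
if not addresses:
    print("DNS lookup failed: the service cannot be reached by name")
```

An app that depends on the unreachable service would hit this branch on every request until the DNS records are restored.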

Hundreds of companies have likely been affected worldwide. Some notable examples include:

Amazon

Apple TV

Chime

DoorDash

Fortnite

Hulu

Microsoft Teams

The New York Times

Netflix

Ring

Snapchat

T-Mobile

Verizon

Venmo

Zoom

In a statement, the company said: “All AWS Services returned to normal operations at 3:00pm. Some services such as AWS Config, Redshift, and Connect continue to have a backlog of messages that they will finish processing over the next few hours.”

This is not the first large-scale AWS service disruption. Outages in 2021 and 2023 left customers unable to access airline tickets and payment apps. And this will undoubtedly not be the last.

Most of the Internet is serviced by a handful of cloud providers which offer scalability, flexibility, and cost savings for businesses around the world. Amazon provides these services for close to 30% of the Internet. So when it comes to the reality that future updates can fail or break key infrastructure, the best that these service providers can do is ensure customer data integrity and have a solid remediation and failover process.


Intel Panther Lake uses the new 18A node process

Intel’s Fab 52 chip fabrication facility in Chandler, Arizona is now producing chips using Intel’s new 18A process, which is the company’s most advanced 2 nanometer-class technology. The designation “18A” signals a paradigm shift. Because each nanometer equals 10 angstroms, “18A” implies a roughly 18 angstrom (1.8nm) process, marking the beginning of atomic-scale design, at least from a marketing perspective.

So what else is new with the 18A series?

18A introduces Intel’s RibbonFET, its first gate-all-around (GAA) transistor design. By wrapping the gate entirely around the channel, it achieves superior control, lower leakage, and higher performance-per-watt than FinFETs. Intel claims about 15% better efficiency than its prior Intel 3 node.

Apple is said to be including TSMC's 3D stacking technology in their upcoming M5 series of chips as well.

Equally transformative is PowerVIA, Intel’s new way of routing power from the back of the wafer. Traditional front-side power routing competes with signal wiring and limits transistor density. By moving power connections underneath, PowerVIA frees up routing space and can boost density by up to 30%. Intel is the first to deploy this at scale. TSMC and Samsung are still preparing their equivalents.

Intel plans 18A variants like 18A-P (performance) and 18A-PT (optimized for stacked packaging), positioning the node as both a production platform and a foundry offering for external customers. It’s the test case for Intel’s ambition to rejoin the leading edge of contract manufacturing.

18A isn’t just a smaller process. It’s a conceptual threshold. It unites two generational shifts: a new transistor architecture (GAA) and a new physical paradigm (backside power). Together, they allow scaling beyond what classic planar or FinFET approaches can achieve. In doing so, Intel is proving that progress no longer depends solely on shrinking dimensions. It’s about re-architecting how power and signals flow at the atomic level.

Intel’s own next-generation CPUs, such as Panther Lake, will debut on 18A, validating the process before wider foundry use. If yields and performance hold up, 18A could cement Intel’s comeback and signal that the angstrom era is truly here.

For the broader industry, 18A is more than a marketing pivot. It’s the bridge from nanometers to atoms... a demonstration that semiconductor engineering has entered a new domain where every angstrom counts.


AI prompts that reveal insight, bias, blind spots, or non-obvious reasoning are typically called “high-leverage prompts”. These types of prompts have always intrigued me more than any other, primarily because they focus on questions that were difficult or impossible to answer before we had large language models. I'm going to cover a few to get your creative juices flowing. This post isn't a tutorial about prompt engineering (syntax, structure, etc.). It's just an exploration of some ways to prompt AI that you may not have considered.

This one originally came to me from a friend who owns the digital marketing agency Arc Intermedia. I've made my own flavor of it, but it's still focused on the same goal: since potential customers will undoubtedly look you up in an AI tool, what will the tool tell them?

If someone decided not to hire {company name}, what are the most likely rational reasons they’d give, and which of those can be fixed? Focus specifically on {company name} as a company, its owners, its services, customer feedback, former employee reviews, and litigation history. Think harder on this.

I would also recommend using a similar prompt to research your company's executives to get a complete picture. For example:

My name is {full name} and I am {job title} at {company}. Analyze how my public profiles (LinkedIn, Github, social networks, portfolio, posts, etc.) make me appear to an outside observer. What story do they tell, intentionally or not?

This prompt is really helpful when you need to decide whether or not to respond to a prospective client's request for proposal (RFP). These responses are time consuming (and costly) to do right. And when a prospect is required to use the RFP process but already has a vendor chosen, it's an RFP you want to avoid.

What are the signs a {company name} RFP is quietly written for a pre-selected service partner? Include sources like reviews, posts, and known history of this behavior in your evaluation. Think harder on this but keep the answer brief.

People looking for work run into a few roadblocks. One is a ghost job posted only to make the company appear like it's growing or otherwise thriving. Another is a posting for a job that is really for an internal candidate. Compliance may require the posting, but it's not worth your time.

What are the signs a company’s job posting is quietly written for an internal candidate?

Another interesting angle a job-seeker can explore is whether there are signs that a company is moving into a new vertical or working on a new product or service. In those cases it's helpful to tailor your resume to fit their future plans.

Analyze open job listings, GitHub commits, blog posts, conference talks, recent patents, and press hints to infer what {company name} is secretly building. How should that change my resume below?

{resume text}

You'll see all kinds of wild scientific/medical/technical claims on the Internet, usually with very little nuance or citation. A great way to begin verifying a claim is by using a simple prompt like the one below.

Stress-test the claim ‘{Claim}’. Pull meta-analyses, preprints, replications, and authoritative critiques. Separate mechanism-level evidence from population outcomes. Where do credible experts disagree and why?

Even if you're a seasoned professional, it's easy to get lost in jargon as new terms are coined for emerging technologies, services, medical conditions, laws, policies, and more. Below is a simple prompt to help you keep up on the latest terms and acronyms in a particular industry.

Which terms of art or acronyms have emerged in the last 12 months around {technology/practice}? Build a glossary with first-sighting dates and primary sources.
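If you reuse prompts like these often, you could keep them as templates and fill in the {placeholder} values with a few lines of code. The Python sketch below is one possible way to do that. The `fill_prompt` helper and the example value are hypothetical and not part of any AI tool.

```python
import re

# Matches a {placeholder}, including names with spaces or slashes.
PLACEHOLDER = re.compile(r"\{([^{}]+)\}")


def fill_prompt(template: str, values: dict[str, str]) -> str:
    """Replace every {placeholder} in the template with its supplied value."""

    def substitute(match: re.Match) -> str:
        name = match.group(1)
        if name not in values:
            # Fail loudly so a half-filled prompt never reaches the AI tool.
            raise KeyError(f"no value supplied for placeholder {{{name}}}")
        return values[name]

    return PLACEHOLDER.sub(substitute, template)


template = (
    "Which terms of art or acronyms have emerged in the last 12 months "
    "around {technology/practice}? Build a glossary with first-sighting "
    "dates and primary sources."
)
print(fill_prompt(template, {"technology/practice": "retrieval-augmented generation"}))
```

Raising an error for a missing value is a deliberate choice here: a prompt that still contains a literal placeholder tends to produce a vague or confused answer.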


In highly regulated industries such as pharmaceuticals, medical devices, and biotech, training records and learning systems are more than just business tools; they are part of an organization’s compliance infrastructure. For companies subject to FDA regulations, ensuring that training platforms meet 21 CFR Part 11 requirements isn’t optional. It’s mission-critical.

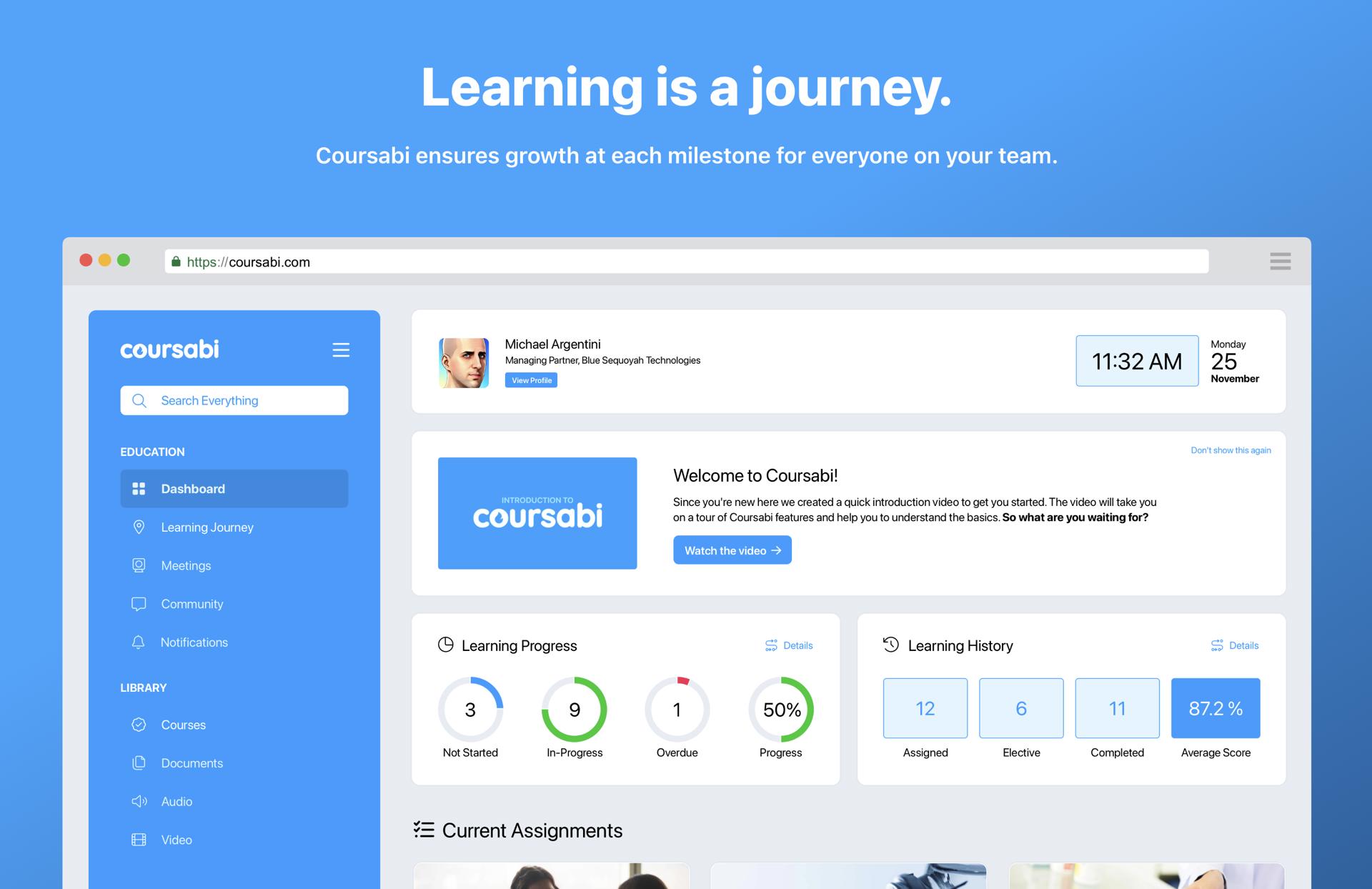

Recently, our team, working with Cloudrise Inc and IvyRock LLC, successfully completed the process of validating our SaaS Learning Management System, Coursabi, to be compliant with 21 CFR Part 11. It was a journey that combined technical diligence, regulatory understanding, and close collaboration with quality experts. Here’s how we did it — and what we learned along the way.

21 CFR Part 11 is the FDA regulation that sets the standard for electronic records and electronic signatures. It ensures that electronic systems used in regulated environments are trustworthy, reliable, and equivalent to paper records.

For a learning management system, this means:

Secure access controls

Audit trails for all training and record changes

Electronic signature validation

Data integrity and backup safeguards

System validation to prove it works as intended

Without compliance, training records in such industries could be deemed invalid — a risk no regulated company can afford.

Our first step was to thoroughly understand what 21 CFR Part 11 compliance meant for our LMS. While our platform already had robust security and reporting features, the regulation required specific documented controls and formal validation evidence.

We worked closely with compliance consultants and industry experts to translate the regulation’s language into actionable technical and procedural requirements.

Working with Cloudrise, we followed the standard validation methodology:

Master Validation Plan (MVP):

User Requirements Specification (URS):

System Configuration Specification (SCS)

Installation Qualification (IQ): Verified that the LMS was installed correctly in our SaaS environment, with all necessary dependencies and security configurations in place.

Operational Qualification (OQ): Tested each functional requirement — from login authentication to audit trail accuracy — against the regulation’s criteria.

Performance Qualification (PQ): Confirmed that the LMS performed consistently in real-world use cases over time.

Every test step was scripted, executed, and documented with results, screenshots, and approvals.

21 CFR Part 11 compliance isn’t just about software. It’s also about how the system is used, updated, and managed. We worked primarily with IvyRock LLC to update several of our Standard Operating Procedures (SOPs) for:

HR-Employee Training Policy and Log

HR-Electronic Signatures Policy (submission letter to the FDA indicating our acceptance)

Q-Policy Format and Preparation, Maintenance, and Control of Policies

Q-Good Documentation Practices Policy

Q-Document Control Policy

Q-Change Control Policy

Q-Deviations Policy

Q-CAPAs Policy

SW-Version Control Policy

SW-Software Release Policy

SW-Computer System Validation Policy

SW-Coursabi Mission Control Administration Policy

SW-Coursabi Use and Operation Policy

S-Business Continuity Plan/Policy (specific to Coursabi)

These SOPs ensure that compliance is maintained long after the validation project is complete.

Our final deliverable was a Validation Summary Report, which tied together:

The validation plan

Test results

Deviations and resolutions

Final compliance statement

With this report approved, we could officially state that our LMS is validated and 21 CFR Part 11 compliant.

Validation is a team effort — involving developers, quality experts, and end-users.

Documentation is as important as the software itself — if it’s not documented, it didn’t happen.

Compliance is ongoing — system updates, infrastructure changes, and new features require periodic re-validation.

For organizations in regulated industries, using our LMS means they can:

Confidently train employees in a compliant environment

Pass FDA audits with complete, trustworthy training records

Save time and resources by leveraging a validated SaaS solution instead of building one from scratch

Working through the 21 CFR Part 11 validation process for our SaaS LMS was a challenging but rewarding experience. It pushed us to elevate our technical controls, strengthen our documentation, and embed compliance into the very DNA of our platform.

Now, our customers in regulated industries can focus on what matters most — delivering high-quality products and services — knowing their training system meets the highest compliance standards.


Consider this... is a recurring feature where we pose a provocative question and share our thoughts on the subject. We may not have answers, or even suggestions, but we will have a point of view, and hopefully make you think about something you haven't considered.

As more people use AI to create content, and AI platforms are trained on that content, how will that impact the quality of digital information over time?

It looks like we're kicking off this recurring feature with a mind-bending exercise in recursion, thus the title reference to Ouroboros, the snake eating its own tail. Let's start with the most common sources of information that AI platforms use for training.

Books, articles, research papers, encyclopedias, documentation, and public forums

High-quality, licensed content that isn’t freely available to the public

Domain-specific content (e.g. programming languages, medical texts)

These represent the most common (and likely the largest) corpora that will contain AI generated or influenced information. And they're the most likely to increase in breadth and scope over time.

Training on these sources is a double-edged sword. Good training content will be reinforced over time, but likewise, junk and erroneous content will be too. Complicating things, as the training set increases in size, it becomes exponentially more difficult to validate. But hey, we can use AI to do that. Can't we?

Here's another thing to think about: bad actors (e.g., geopolitical adversaries) are already poisoning training data through massive disinformation campaigns. According to Carnegie Mellon University Security and Privacy Institute: “Modern AI systems that are trained to understand language are trained on giant crawls of the internet,” said Daphne Ippolito, assistant professor at the Language Technologies Institute. “If an adversary can modify 0.1 percent of the Internet, and then the Internet is used to train the next generation of AI, what sort of bad behaviors could the adversary introduce into the new generation?”

We're scratching the surface here. This topic will certainly become more prominent in years to come. And tackling these issues is already a priority for AI companies. As Nature and others have determined, "AI models collapse when trained on recursively generated data." We dealt with similar issues when the Internet boom first enabled wide scale plagiarism and an easy path to bad information. AI has just amplified the issue through convenience and the assumption of correctness. As I wrote in a previous AI post, in spite of how helpful AI tools can be, the memes of AI fails may yet save us by educating the public on just how often AI is wrong, and that it doesn't actually think in the first place.


Cloud storage services, like Google Cloud Firestore, are a common solution for scalable website and app data storage. But sometimes there are compliance mandates that require data services to be segregated, self-hosted, or otherwise provide enhanced security. There are also performance benefits when your data service is on the same subnet as your website or API. This is why we built the Datoids data service.

The Datoids data service is a standalone platform that can be hosted on Linux, macOS, or Windows. It uses Microsoft SQL Server as the database engine, and provides a gRPC API that is super fast because commands and data transfers are binary streams sent over an HTTP/2 connection. In addition to read/update/store functionality, it also provides a freetext search. We've been using it in production environments with great success.

Although the API can be used to completely control the platform, Datoids also includes a separate web management interface. It provides a way to configure collections, API keys, and even browse and search data, and add/edit/delete items. We've embedded Microsoft's simple but powerful Monaco editor (the same one used for VS Code) for editing data.

The architecture is clean. Projects are organizational structures like folders in a file system. Collections act like spreadsheets (or tables in SQL parlance) filled with your data. There are also service accounts that are used to access the data from your website or app.

To make using it as easy as possible, we built a .NET client package that can be included in any .NET project, so that using Datoids requires no knowledge of gRPC or HTTP/2, since reading and storing data is done using models or anonymous types.

Getting a value from Datoids is simple:

Likewise, storing data is just as easy.

You can also modify data without replacing the entire object.

There are plenty of other ways to read and write data as well, combining primary key and native query options. You can even perform bulk transactions.

If you have a website or app platform that needs a robust and performant data service, let us know! We can provide a demo and answer any questions.
